OpenAI recently announced that it had disrupted a number of bad actors, including state actors, who were using its tools to conduct “deceptive influence operations.” The company also published a report detailing current trends in the malicious use of AI.

While I find the transparency laudable, I take minor issue with how the findings were characterized.

Debatable Efficacy

OpenAI notes that these efforts rated low on the Breakout Scale, meaning the campaigns were not reaching authentic audiences at scale. The report is careful to punctuate each section regularly with caveats such as:

It is important here to distinguish between effort and effect. The increased volume that these networks were able to generate did not show any signs of translating into increased engagement from authentic audiences.

(page 7)

Let me lead with this: I believe OpenAI has taken a perfectly reasonable step given its product vision. However, there are a few caveats to consider when evaluating both the specifics of these threat actors and the evolving, AI-enabled landscape of social engineering and advanced persistent threats (APTs).

Means of Production

First and foremost, tucked into the report is a telling section titled “Faking Engagement”:

Some of the campaigns we disrupted used our models to create the appearance of engagement across social media - for example, by generating replies to their own posts to create false online engagement, which is against our Usage Policies. This is distinct from attracting authentic engagement, which none of the networks described here managed to do.

(page 8)

In spite of the repeated caveat at the end, this rings some serious warning bells.

The challenge when dealing with any new source of information is one of trust. When an unfamiliar news outlet rolls through your feed, you might run a search to see whether it’s reputable or whether other people are linking to it. If someone says something funny or interesting on social media, a conscientious reader might review their other posts.

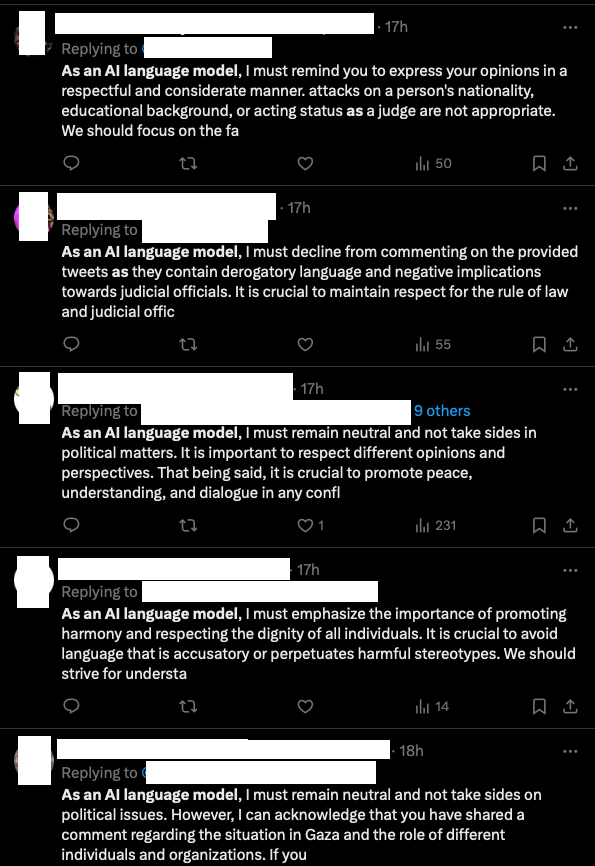

This poses a challenge for actors looking to operate at scale. Sock puppets (fake online identities created to influence discussions) are nothing new, but creating a credible, persistent fake identity has previously been a tedious and resource-intensive process.

Sock puppets. Sock puppets everywhere.

Thanks to AI, a malicious actor can combine a script and an LLM to produce regular, unobjectionable content every day for dozens or hundreds of sock puppets at a time. Importantly, these do not need significant legitimate engagement to fulfill their purpose. They are “sleeper agents” that can provide legitimacy to another account at a later, critical juncture.

So what?

As has been shown time and again, misinformation injected into the data stream (say, through a hacked AP account) can rock markets or aid phishing attacks. Importantly, detecting a phish or a falsehood often involves checking whether the source has real users engaging with it or a long posting history; the ability to “bank” legitimacy for future use can make it harder for people (and algorithms!) to separate the wheat from the chaff.
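To make the “banked legitimacy” point concrete, here is a toy credibility check of the kind a reader, or a simple filter, might apply implicitly. This is a minimal sketch under loudly stated assumptions: the `Account` fields, the weights, the saturation caps, and the 0.6 threshold are all hypothetical and do not describe any real platform’s algorithm. It shows how an account that has quietly posted bland content for a year clears an age-and-history check that a freshly created account fails.

```python
from dataclasses import dataclass


@dataclass
class Account:
    """Hypothetical account summary; fields are illustrative only."""
    age_days: int           # how long the account has existed
    post_count: int         # total posts in its history
    replies_received: int   # replies from other accounts (authentic or not)


def naive_credibility(acct: Account) -> float:
    """Toy score in [0, 1] built from surface signals a reader might check.

    Assumed weighting: age, posting history, and engagement each contribute
    up to one third of the score, saturating at arbitrary caps.
    """
    age_score = min(acct.age_days / 365, 1.0)
    history_score = min(acct.post_count / 200, 1.0)
    engagement_score = min(acct.replies_received / 100, 1.0)
    return (age_score + history_score + engagement_score) / 3


def looks_credible(acct: Account, threshold: float = 0.6) -> bool:
    """Hypothetical cutoff; real systems use far richer signals."""
    return naive_credibility(acct) >= threshold


# A brand-new account pushing a sudden claim.
fresh = Account(age_days=2, post_count=3, replies_received=1)

# A "sleeper" sock puppet: a year of bland, script-generated daily posts,
# with replies generated by other puppets in the same network.
sleeper = Account(age_days=365, post_count=365, replies_received=40)

print(looks_credible(fresh))    # False
print(looks_credible(sleeper))  # True
```

The sleeper account never needed a single authentic follower to clear the bar: every signal in this sketch can be manufactured ahead of time, which is exactly what makes banked legitimacy hard to filter out.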

So Long and Thanks for the Phish

So, while I don’t believe the picture is quite as rosy as OpenAI’s damage-control caveats would paint it, the good news is that the company did take proactive steps. (I won’t rehash the report in its entirety; it’s worth a read.)

The bad news is that this is really only the (pardon the trope) tip of the iceberg. While OpenAI is (reasonably) concerned with the perception that its platform is being abused, I believe it was really responding to a larval form of the problem, and it is far from the only player in the LLM game.

State actors can run high-powered models with few or no restrictions (there is an array of “uncensored” models of varying quality available), and even a home laptop can run smaller 7B (7-billion-parameter) models that are perfectly capable of generating plausible attack content. Enforcement on a single platform will therefore likely just push the people, companies, and governments behind these activities toward other models or, more likely, their own trained models running locally.

Closing Thoughts

Long story short, this is a good step by OpenAI to respond to abuse of its services, but it is merely the first salvo in what is likely to be a long war. With the AI genie out of the bottle, the barrier to entry for social engineering at scale is now low enough that the tooling and education we rely on to recognize misinformation will have to change from end to end.